The adoption of generative AI has been hindered by concerns regarding data security, privacy, and control. Salesforce’s Einstein GPT Trust Layer solution is designed to empower enterprise companies with trusted generative AI capabilities while ensuring data integrity and compliance.

The Data Dilemma

The advent of large language models (LLMs) has ushered in unprecedented capabilities in natural language understanding and generation. Yet, as powerful as these models are, concerns about data privacy and unauthorized access have lingered. When data is fed into an LLM, there has been limited control over how that data is processed, and the potential risk of sensitive information finding its way into generated content has caused apprehension among enterprises.

Moreover, the submission of prompts to LLMs can inadvertently include customer data, thereby exposing organizations to potential breaches and compliance violations. The challenge lies in striking a balance between harnessing the capabilities of generative AI and ensuring that data remains protected at all times.

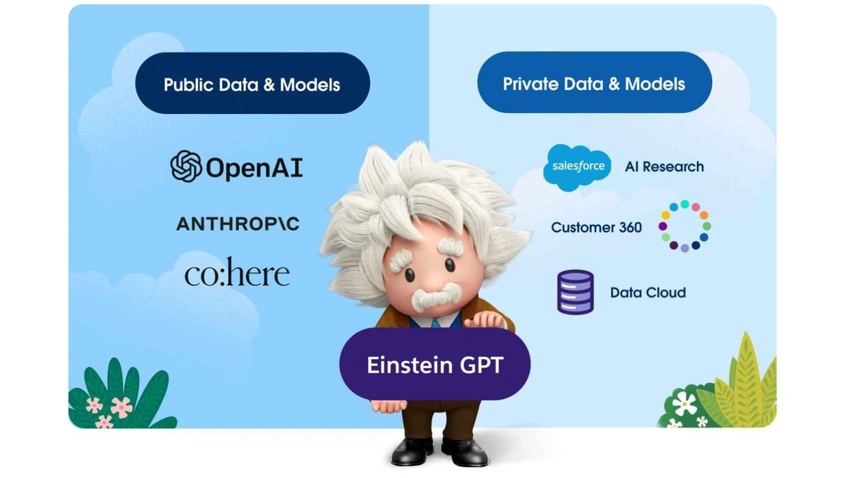

Introducing the Einstein GPT Trust Layer

Salesforce introduced the Einstein GPT Trust Layer to address these challenges. It is a set of safeguards covering data retention, encrypted transport, access control, content filtering, feedback, and auditing, designed to assure enterprises that their data stays secure, private, and compliant.

Zero Data Retention: Putting Control in Your Hands

One of the cornerstones of the Einstein GPT Trust Layer is zero data retention. Customer data is never stored outside the Salesforce ecosystem: when prompts are sent to the LLM, third-party LLM providers neither retain the prompts and responses nor use them for training. This approach greatly reduces the risk of data leakage and keeps sensitive information within the trusted confines of your organization.

Encrypted Communications: A Secure Connection

The Einstein GPT Trust Layer encrypts communications with Transport Layer Security (TLS), safeguarding both the prompts transmitted to the LLM and the responses sent back to Salesforce. This cryptographic protection keeps data confidential in transit and makes any tampering along the way detectable.
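To make the idea concrete, here is a minimal Python sketch of how a client might require TLS 1.2 or newer before sending a prompt. It is an illustration only and does not reflect how Salesforce configures the Trust Layer; the function name `make_tls_context` is a hypothetical helper.

```python
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context (illustrative helper, not a Salesforce API)."""
    # The default context already verifies server certificates and hostnames.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_tls_context()
```

A context like this can be passed to standard-library clients such as `http.client.HTTPSConnection(host, context=context)`, so that any connection failing certificate validation or negotiating an older protocol is rejected before data is sent.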

Data Access Checks: Empowering Responsible AI Usage

To uphold data access controls, the Einstein GPT Trust Layer enforces stringent data access checks. When generating responses that involve customer data, the system ensures that only data explicitly allowed by the user’s permissions is incorporated into the prompts. This keeps unauthorized data out of prompts, which supports compliance with privacy regulations and maintains the integrity of generated content.
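The access check described above can be pictured as a filter applied before a prompt is assembled. The sketch below assumes a simplified model in which a record is a dictionary and the user's permissions are a set of readable field names. The function `build_grounded_prompt` and the sample field names are hypothetical and are not part of any Salesforce API.

```python
from typing import Mapping, Set


def build_grounded_prompt(
    template: str, record: Mapping[str, str], readable_fields: Set[str]
) -> str:
    """Fill the template using only the fields the requesting user may read."""
    # Drop every field that the user's permissions do not explicitly allow.
    permitted = {name: value for name, value in record.items() if name in readable_fields}
    context_lines = [f"{name}: {value}" for name, value in sorted(permitted.items())]
    return template.format(context="\n".join(context_lines))


# Hypothetical record; the user may read Name and Industry but not AnnualRevenue.
record = {"Name": "Acme Corp", "Industry": "Manufacturing", "AnnualRevenue": "12000000"}
prompt = build_grounded_prompt(
    "Summarize this account.\n{context}",
    record,
    readable_fields={"Name", "Industry"},
)
```

Filtering on an allow-list rather than a deny-list means a newly added field is excluded from prompts until someone explicitly grants access to it.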

Toxicity Checks and Bias Filters: Fostering Fairness and Respect

In a world increasingly aware of the impact of harmful content and biased language, the Einstein GPT Trust Layer goes a step further by incorporating toxicity checks and bias filters. These measures screen generated responses for toxic language and harmful biases before they reach users, promoting respectful and inclusive interactions. By proactively addressing these pitfalls, the trust layer contributes to a positive user experience while safeguarding brand reputation and values.
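One way to think about such a check is as a gate between the model's output and the user. The sketch below is purely illustrative: the scorer is a trivial keyword-based stand-in, the threshold is arbitrary, and a production system would presumably use a trained classifier. None of the names come from Salesforce.

```python
from typing import Callable, Optional


def placeholder_toxicity_score(text: str) -> float:
    """Stand-in scorer: fraction of words found in a tiny blocklist (not a real classifier)."""
    blocklist = {"idiot", "stupid"}
    words = [word.strip(".,!?").lower() for word in text.split()]
    if not words:
        return 0.0
    return sum(word in blocklist for word in words) / len(words)


def gate_response(
    text: str,
    scorer: Callable[[str], float] = placeholder_toxicity_score,
    threshold: float = 0.1,  # arbitrary cut-off chosen for this example
) -> Optional[str]:
    """Return the response unchanged, or None when its score exceeds the threshold."""
    return None if scorer(text) > threshold else text


safe = gate_response("Thanks for reaching out, happy to help.")
blocked = gate_response("You are an idiot.")
```

Because the scorer is passed in as a parameter, the same gate could wrap a bias detector or any other content classifier without changing the calling code.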

Feedback Store: Learning and Improving

The Einstein GPT Trust Layer is committed to driving continuous improvement in AI-generated interactions. The feedback store captures crucial data points, including the usefulness of generated responses and how they were ultimately received by service agents. This valuable feedback loop allows organizations to refine their prompts over time, enhancing the quality of AI-driven interactions and bolstering overall performance.
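As a rough sketch of what such a feedback loop might look like, the example below records how agents handled each generated response and reports an acceptance rate per prompt template. The class names, the `template_id` field, and the three outcome labels are assumptions made for illustration, not the actual schema of the feedback store.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

VALID_OUTCOMES = {"accepted", "edited", "rejected"}  # assumed labels


@dataclass(frozen=True)
class FeedbackEntry:
    template_id: str  # hypothetical identifier of the prompt template used
    outcome: str      # how the service agent handled the generated response


class FeedbackStore:
    def __init__(self) -> None:
        self._entries: List[FeedbackEntry] = []

    def record(self, template_id: str, outcome: str) -> None:
        if outcome not in VALID_OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome}")
        self._entries.append(FeedbackEntry(template_id, outcome))

    def acceptance_rate(self) -> Dict[str, float]:
        """Share of responses accepted without edits, per prompt template."""
        totals: Dict[str, int] = defaultdict(int)
        accepted: Dict[str, int] = defaultdict(int)
        for entry in self._entries:
            totals[entry.template_id] += 1
            if entry.outcome == "accepted":
                accepted[entry.template_id] += 1
        return {template: accepted[template] / totals[template] for template in totals}


store = FeedbackStore()
for outcome in ["accepted", "accepted", "rejected", "edited"]:
    store.record("case_reply", outcome)
store.record("kb_summary", "accepted")
rates = store.acceptance_rate()
```

A template with a low acceptance rate is a natural candidate for prompt revision, which is the kind of refinement the feedback loop is meant to support.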

Audit Trail: Navigating Compliance with Confidence

Staying compliant with evolving regulations is a paramount concern for enterprises leveraging AI technologies. The Einstein GPT Trust Layer simplifies compliance by maintaining a comprehensive audit trail. This secure log records all interactions, prompts, outputs, and feedback data, empowering organizations to confidently deploy generative AI while adhering to regulatory requirements.
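To illustrate what a secure log could involve, the sketch below implements an append-only trail in which each entry stores a hash of the previous one, so later modification of any entry can be detected. Hash chaining is an assumption chosen for the example; the source does not say how the Trust Layer secures its audit trail, and the class and field names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: Dict[str, Any]) -> str:
    """Hash the previous entry's hash together with a canonical JSON form of the event."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class AuditTrail:
    def __init__(self) -> None:
        self._entries: List[Dict[str, Any]] = []

    def append(self, event: Dict[str, Any]) -> None:
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS_HASH
        self._entries.append(
            {"event": dict(event), "prev_hash": prev_hash, "hash": _digest(prev_hash, event)}
        )

    def verify(self) -> bool:
        """Recompute the chain and report whether every entry is still intact."""
        prev_hash = GENESIS_HASH
        for entry in self._entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append({"type": "prompt", "prompt": "Summarize this account."})
trail.append({"type": "feedback", "outcome": "accepted"})
intact = trail.verify()
```

Editing or removing an earlier entry changes its hash and breaks every link after it, so `verify()` returns `False` unless the whole chain is recomputed. In practice the latest hash would need to be stored somewhere an attacker cannot rewrite.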

Conclusion

The Einstein GPT Trust Layer gives enterprise companies a foundation of trust and security for generative AI. By addressing concerns surrounding data privacy, retention, and access, it paves the way for the responsible and impactful adoption of generative AI technologies. With its suite of features, including zero data retention, encrypted communications, data access checks, toxicity checks and bias filters, a feedback store, and an audit trail, the Einstein GPT Trust Layer enables organizations to harness the power of AI-driven interactions while upholding high standards of data integrity and compliance.